As we expected, WWDC 2025 – mainly the opening keynote – came and went without a formal update on Siri. Apple is still working on the AI-infused update, which is essentially a much more personable and actionable virtual assistant. TechRadar’s Editor at Large, Lance Ulanoff, broke down the specifics of what’s causing the delay after a conversation with Craig Federighi.

Now, even without the AI-infused Siri, Apple did deliver a pretty significant upgrade to Apple Intelligence, but it’s not necessarily in the spot you’d think. It’s giving Visual Intelligence – a feature exclusive to the iPhone 16 family, iPhone 15 Pro, and iPhone 15 Pro Max – an upgrade as it gains on-screen awareness and a new way to search, all housed within the power of a screenshot.

It’s a companion feature to the original set of Visual Intelligence know-how: a long press on the Camera Control button (or a customized Action Button on the 15 Pro) pulls up a live view of your iPhone’s camera and the ability to take a shot, as well as “Ask” or “Search” for what your iPhone sees.

It’s kind of a more basic version of Google Lens, in that you can identify plants and pets, and search visually. Much of that won’t change with iOS 26, but you’ll now be able to use Visual Intelligence on screenshots, too. Following a brief demo at WWDC 2025, I’m eager to use it again.

Visual Intelligence makes screenshots a lot more actionable, and could potentially save you space on your iPhone … especially if your Photos app is anything like mine and filled with screenshots. The bigger deal on Apple’s part is that this gives us a first taste of on-screen awareness.

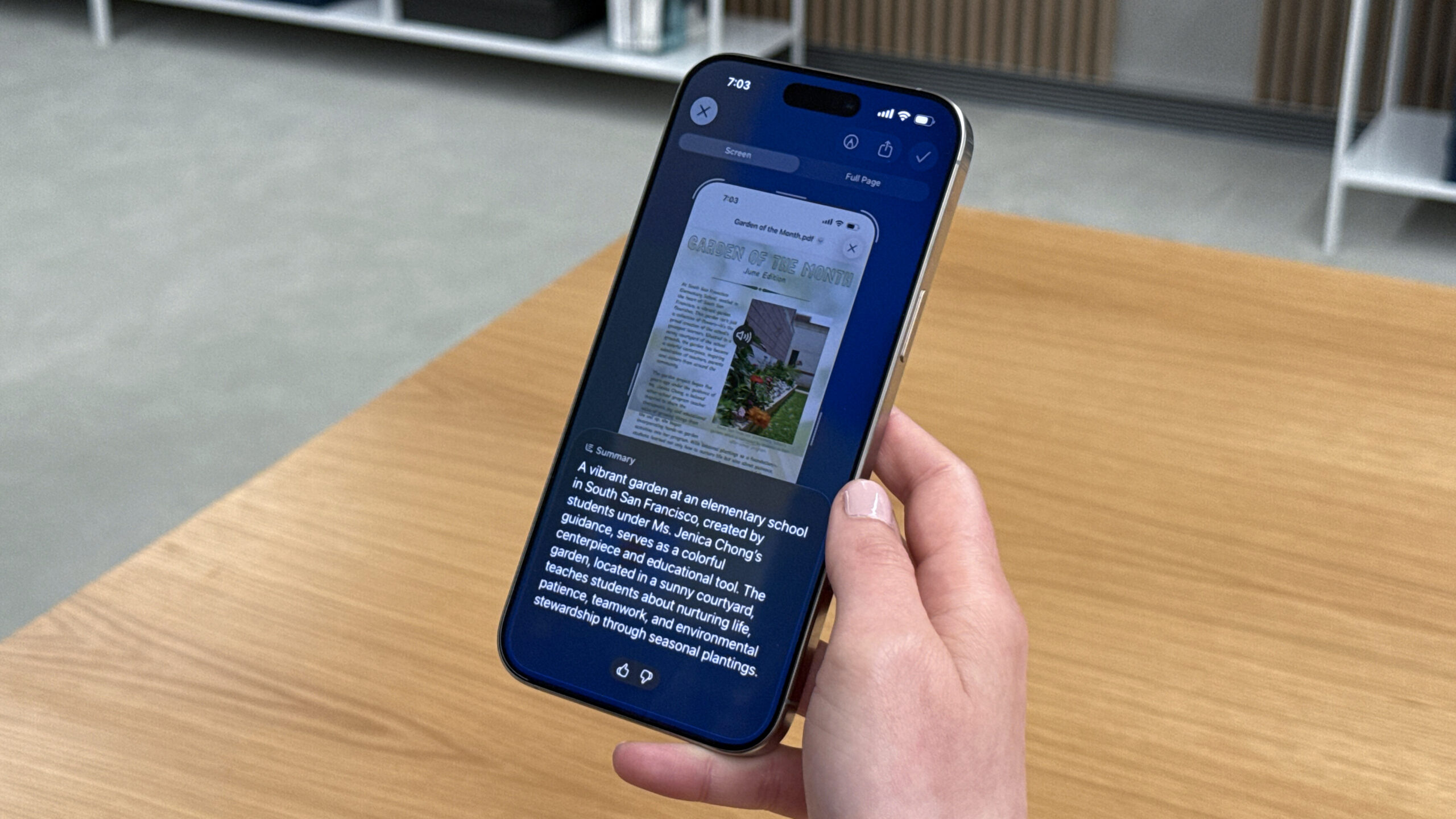

In the demo I saw, screenshotting a Messages chat with a poster for an upcoming movie night revealed a glimpse of the new interface. It’s the iPhone’s classic screenshot interface, but with the familiar “Ask” on the bottom left, “Search” on the bottom right, and in the middle a suggestion from Apple Intelligence that varies based on whatever you screenshot.

In this case, it was “Add to Calendar,” allowing me to easily create an invite with the name of the movie night on the right date and time, as well as the location. Essentially, it’s identifying the elements in the screenshot and extracting the relevant information.

Pretty neat! Rather than just taking a screenshot of the image, you can have an actionable event added to your calendar in mere seconds. It also bakes in functionality that I think a lot of iPhone owners will appreciate – even if Android phones like the best Pixels or the Galaxy S25 Ultra have been able to do this for a while.

Apple Intelligence will provide these suggestions when it deems them relevant – that could be creating an invite or a reminder, translating other languages into your preferred one, summarizing text, or even reading it aloud.

All very handy, but let’s say you’re scrolling TikTok or Instagram Reels and see a product, maybe a lovely button-down or a poster that catches your eye. Visual Intelligence has a solution for this, and it’s kind of Apple’s answer to ‘Circle to Search’ on Android.

You’ll take a screenshot, and then simply scrub over the part of the image you want to search. It’s a similar on-screen effect to when you select an object to remove with ‘Clean Up’ in Photos, but after that, it will let you search for it via Google or ChatGPT. Other apps can also opt in to this API that Apple is making available.

And that’s where this gets pretty exciting – you’ll be able to scroll through all the available places to search, such as Etsy or Amazon. I think this will be a fan favorite when it ships, though it’s not entirely a reason to go out and buy an iPhone that supports Visual Intelligence … yet, at least.

Additionally, if you’d rather search with the whole screenshot, that’s where the ‘Ask’ and ‘Search’ buttons come in. With those, you can use either Google or ChatGPT. On top of analyzing and suggesting via screenshots, or searching with a selection, Apple’s also expanding the types of things that Visual Intelligence can recognize, going beyond pets and plants to books, landmarks, and pieces of art.

Not all of it was available immediately at launch, but Apple is clearly working to expand the capabilities of Visual Intelligence and enhance the feature set of Apple Intelligence. Considering this gives us a glimpse into on-screen awareness, I’m pretty excited.
