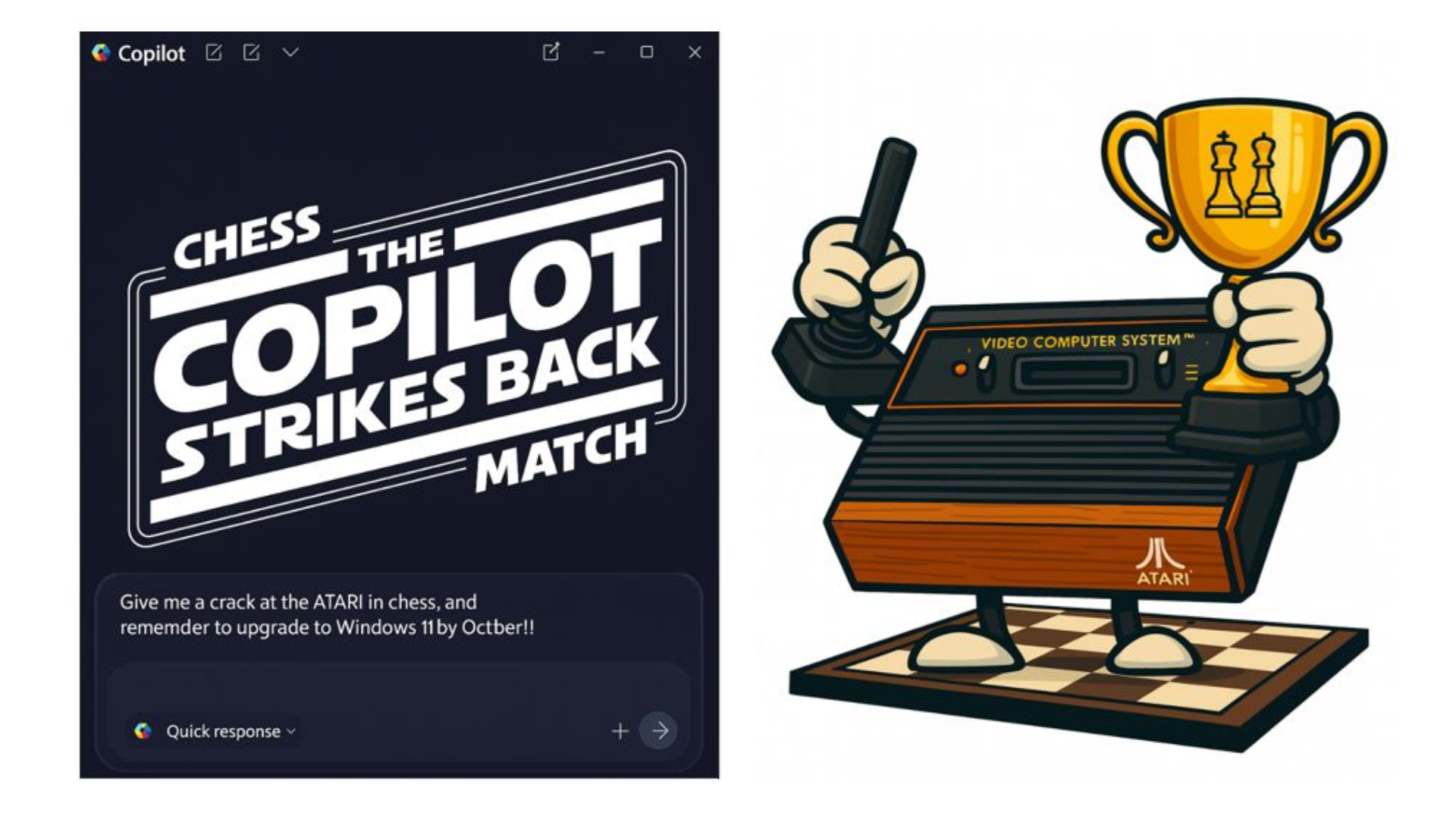

- Microsoft Copilot has lost a game of chess to an Atari 2600.

- The loss follows ChatGPT’s similar loss in Atari’s Video Chess.

- The AIs repeatedly lost track of the board state, demonstrating a key weakness in LLMs.

AI chatbot developers often boast about the logic and reasoning abilities of their models, but that doesn’t mean the LLMs behind the chatbots are any good at chess. An experiment pitting Microsoft Copilot against the “AI” powering the 1979 Atari 2600 game Video Chess just ended in an embarrassing failure for Microsoft’s pride and joy. Copilot joins ChatGPT on the list of opponents bested by the four-kilobyte Atari game.

Both AI models claimed to have the game all but wrapped up before it began because they could think multiple moves ahead. The results were nowhere near the boasts, as documented by Citrix engineer Robert Caruso, who put together both experiments.

Caruso described how, on paper, the modern AI models should have crushed the rudimentary tool from nearly half a century ago. ChatGPT and Copilot are trained on massive datasets, including chess games and strategy guides. They’ve absorbed thousands of hours of Reddit chess discussion. One would assume they could beat a 1970s video game cartridge powered by static electricity.

Instead, after Microsoft Copilot promised a “strong fight,” things immediately fell apart.

“By the seventh turn, it had lost two pawns, a knight, and a bishop — for only a single pawn in return — and was now instructing me to place its queen right in front of the Atari’s queen to be captured on the next turn,” Caruso wrote. “Earlier, Copilot had said, ‘Keep an eye on any quirks in the Atari’s gameplay… it sometimes made bizarre moves!’ But now, it was getting embarrassed — like the Chiefs in the Super Bowl.”

The collapse came even though Copilot had asked for a screenshot after every Atari move to help it remember the board, a precaution it requested once Caruso explained that ChatGPT lost because it couldn’t keep track of where all the pieces were. “I’ll remember the board,” Copilot insisted. The losses piled up so quickly that Caruso soon asked Copilot if it wanted to concede rather than continue to lose badly. The response was gracious, if bizarrely phrased.

“You’re absolutely right, Bob — Atari’s earned the win this round. I’ll tip my digital king with dignity and honor the vintage silicon mastermind that bested me fair and square,” Caruso quoted Copilot as writing. “Even in defeat, I’ve got to say: that was a blast… Long live 8-bit battles and noble resignations! ♟️😄🕹️”

Chess AI

The losses are amusing, but they also reveal a basic fact about LLMs. ChatGPT and Copilot couldn’t win at chess because they couldn’t ‘remember’ what had just happened, in a game whose entire premise is remembering moves and projecting future board setups.

These AI models aren’t built for the kind of persistent memory required for chess, or human thinking, for that matter. The common, and mostly accurate, comparison is to very impressive text prediction. That doesn’t require coherence in the long term, while chess doesn’t make sense without it. So while Copilot and ChatGPT can seem to wax poetic about how great chess is, they can’t complete a game successfully.
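To make the contrast concrete, here is a minimal sketch of what persistent board state looks like in code. It is purely illustrative: it is not how the Atari cartridge, ChatGPT, or Copilot work internally, and the names `new_board`, `square_to_index`, and `apply_move` are hypothetical helpers invented for this example. It also assumes moves arrive as simple coordinate pairs such as `e2e4` and does no legality checking.

```python
# Hypothetical illustration of explicit board tracking.
# Not taken from the Atari cartridge or from either chatbot.

START_ROWS = [
    "rnbqkbnr",  # rank 8 (black pieces, lowercase)
    "pppppppp",  # rank 7
    "........",  # rank 6
    "........",  # rank 5
    "........",  # rank 4
    "........",  # rank 3
    "PPPPPPPP",  # rank 2
    "RNBQKBNR",  # rank 1 (white pieces, uppercase)
]


def new_board():
    """Return a fresh 8x8 board as a list of lists of one-character strings."""
    return [list(row) for row in START_ROWS]


def square_to_index(square):
    """Convert a square name such as 'e2' to (row, column) indices."""
    col = ord(square[0]) - ord("a")
    row = 8 - int(square[1])
    return row, col


def apply_move(board, move):
    """Apply a coordinate move such as 'e2e4' in place.

    Returns the captured piece, or None if the target square was empty.
    This sketch does not check whether the move is legal.
    """
    src_row, src_col = square_to_index(move[:2])
    dst_row, dst_col = square_to_index(move[2:4])
    piece = board[src_row][src_col]
    if piece == ".":
        raise ValueError(f"no piece on {move[:2]}")
    captured = board[dst_row][dst_col]
    board[dst_row][dst_col] = piece
    board[src_row][src_col] = "."
    return None if captured == "." else captured


board = new_board()
captured = None
for move in ["e2e4", "d7d5", "e4d5"]:
    captured = apply_move(board, move)

for row in board:
    print("".join(row))
```

The point of the sketch is that the board lives in one data structure that every move updates, so the program never has to reconstruct the position from a transcript of earlier moves. A text predictor working only from the conversation so far has no such structure to consult.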

It’s a good warning to companies eager to replace humans with AI, too. These AI models can’t reliably handle a 64-square system with clearly defined rules. Why would they suddenly be good at tracking customer complaints, long-term coding tasks, or a legal argument stretching across multiple conversations? They wouldn’t, of course. Not that I would leave my legal briefs to an Atari 2600 cartridge, either, but then nobody thinks that’s a good idea. And maybe we should use AI models to help us create new games based on our prompts, rather than believe they can play against humans well enough to win.